Note: any file with a .csv extension will probably show with an Excel icon. The CSV file must be text based, that is, saved as plain text with a .csv extension (or .txt in some cases). The best way to find out if it will work is to right-click it on your computer and open it in WordPad or Notepad. If you see unreadable characters, it is an Excel-based file and won't work: an Excel-based file is of type object and can't be read as a string in this flow. You can easily convert it by saving the Excel file as CSV UTF-8 (Comma delimited) in the Save As options. It should also work on an Excel file without table formatting, as long as you convert it to CSV the same way and follow the same extension requirement. ** If the file is on SharePoint, go ahead and save it as CSV UTF-8 and then change the extension.
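The Notepad check above can also be approximated in code. The following is a minimal Python sketch that runs outside the flow and is only a heuristic: it assumes an .xlsx workbook starts with the ZIP signature `PK\x03\x04` and a legacy .xls file with the OLE2 signature, and treats anything that decodes as UTF-8 as a usable text CSV. The function name `looks_like_text_csv` is hypothetical.

```python
# Hypothetical helper that approximates the "open it in Notepad" check.
XLSX_MAGIC = b"PK\x03\x04"  # .xlsx workbooks are ZIP archives
XLS_MAGIC = b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1"  # legacy .xls (OLE2 compound file)


def looks_like_text_csv(data: bytes) -> bool:
    """Return True if the bytes look like plain-text UTF-8 CSV rather than an Excel workbook."""
    if data.startswith((XLSX_MAGIC, XLS_MAGIC)):
        return False
    try:
        # "utf-8-sig" also accepts a UTF-8 byte-order mark, which Excel's CSV UTF-8 option typically writes.
        data.decode("utf-8-sig")
    except UnicodeDecodeError:
        return False
    return True


if __name__ == "__main__":
    print(looks_like_text_csv("Name,Cost\nWidget,\"1,200.50\"\n".encode("utf-8-sig")))  # True
    print(looks_like_text_csv(b"PK\x03\x04\x14\x00\x06\x00"))  # False
```

To check a file on disk, pass it the bytes from `open(path, "rb").read()`. A CSV saved in a legacy non-UTF-8 encoding may be reported as unusable even though Notepad displays it, so treat a `False` result as a prompt to re-save the file as CSV UTF-8.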

Questions: If you have any issues running it, most likely I can figure it out for you.

Anything else we should know: You can easily change the trigger type to be scheduled, manual, or when a certain event occurs.

My file in the example is located on premises. When you change the Get file content action, it will remove any references to it; this is where it belongs, though. Also check the JSON step carefully, since it lets you access your columns dynamically in the following steps.

Be sure to watch where your money columns or other columns with commas fall, as they have their commas replaced by asterisks in this flow. When you write the data, you need to find the money columns and remove the commas, since they will most likely go into a currency column.

Please read the comments within the flow steps; they should explain how to use this flow. You will need to modify the JSON schema to match your column names and types; you should be able to see where the column names and types are within the properties brackets. In this step of your JSON, notice my values have quotes because mine are all string type, even the cost. If you have number types, remove the quotes (around the variables) at the step where the items are appended, and you will probably need to replace the comma in the money value with nothing.

This step controls how many records you are going to process. The flow is set up to process 200 rows per loop; this should not be changed, or due to the nesting of loops it may go over the limit. The flow detects how many loops it needs to perform, so if you have 5,000 rows it will loop 25 times. Make sure the count is over the number of loops; the count just prevents it from looping indefinitely.

9-25-20: A new version of the flow is available. It is optimized and should run 40% to 50% faster.

Note: This flow can take a long time to run; the more rows with commas in the columns, the longer it takes.
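The loop arithmetic described above is a ceiling division: 5,000 rows at 200 rows per loop gives 25 loops, and 5,001 rows would need 26. The Python sketch below reproduces that logic outside the flow. The function names and the `max_loops` parameter (standing in for the loop's count limit) are hypothetical, and the strict "count must exceed the loop count" check follows the advice in the text rather than any platform rule.

```python
import math

ROWS_PER_LOOP = 200  # batch size the flow uses; the text advises not changing it


def loops_needed(total_rows: int, rows_per_loop: int = ROWS_PER_LOOP) -> int:
    """Number of loops needed to cover every data row (ceiling division)."""
    return math.ceil(total_rows / rows_per_loop)


def split_into_batches(rows: list[str], max_loops: int,
                       rows_per_loop: int = ROWS_PER_LOOP) -> list[list[str]]:
    """Slice rows into fixed-size batches, one per loop iteration."""
    needed = loops_needed(len(rows), rows_per_loop)
    # The count limit is only a safety stop; keep it above the loops actually needed.
    if max_loops <= needed:
        raise ValueError(f"count limit {max_loops} must exceed the {needed} loops needed")
    return [rows[i * rows_per_loop:(i + 1) * rows_per_loop] for i in range(needed)]


if __name__ == "__main__":
    print(loops_needed(5000))  # 25
    batches = split_into_batches([f"row {n}" for n in range(450)], max_loops=10)
    print([len(b) for b in batches])  # [200, 200, 50]
```

The last batch is simply shorter, which is why the number of loops can be derived from the row count instead of being hard-coded.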

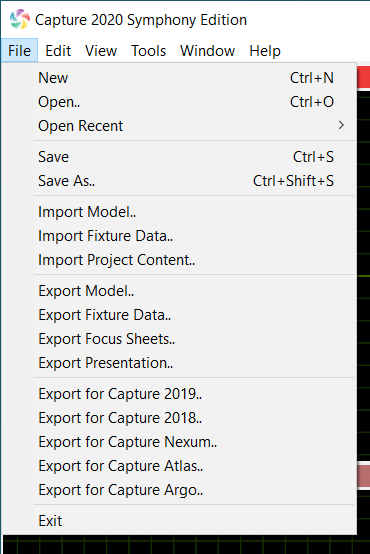

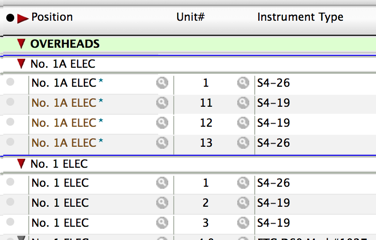
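The comma handling and typed JSON items described earlier can be sketched in Python as follows. This is an illustration under assumptions, not the flow's actual expressions: `mask_quoted_commas`, `row_to_item`, and `build_schema` are hypothetical names, quoted fields are assumed to use plain double quotes with no escaped `""` pairs, and restoring commas in ordinary text columns is an added assumption. Money columns have the masking asterisks removed and become numbers, so they serialize without quotes, which is the same difference as removing the quotes around number variables.

```python
import json


def mask_quoted_commas(line: str) -> str:
    """Replace commas inside double-quoted fields with '*' and drop the quote characters."""
    out = []
    in_quotes = False
    for ch in line:
        if ch == '"':
            in_quotes = not in_quotes  # assumption: no escaped "" pairs inside fields
            continue
        out.append("*" if ch == "," and in_quotes else ch)
    return "".join(out)


def row_to_item(line: str, columns: list[str], money_columns: set[str]) -> dict:
    """Turn one CSV line into a dict; money columns become numbers, others stay strings."""
    cells = mask_quoted_commas(line).split(",")
    item = {}
    for name, cell in zip(columns, cells):
        if name in money_columns:
            # The asterisks were thousands separators: remove them, then parse as a number.
            item[name] = float(cell.replace("*", ""))
        else:
            # Assumption: put the original commas back in ordinary text columns.
            item[name] = cell.replace("*", ",")
    return item


def build_schema(columns: list[str], money_columns: set[str]) -> dict:
    """JSON Schema for an array of row objects, with one typed entry per column under 'properties'."""
    return {
        "type": "array",
        "items": {
            "type": "object",
            "properties": {
                name: {"type": "number" if name in money_columns else "string"}
                for name in columns
            },
        },
    }


if __name__ == "__main__":
    columns = ["Item", "Cost", "Vendor"]
    item = row_to_item('Widget,"1,200.50","Acme, Inc"', columns, {"Cost"})
    print(json.dumps(item))  # {"Item": "Widget", "Cost": 1200.5, "Vendor": "Acme, Inc"}
    print(json.dumps(build_schema(columns, {"Cost"})["items"]["properties"]))
```

If every column stays a string, as in the author's example, `money_columns` is empty and every value keeps its quotes.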

Description: This flow allows you to import CSV files into a destination table.

Actually, I have uploaded 2 flows. One is a compact flow that just removes the JSON-accessible dynamic columns. You can easily access the columns in the compact version by using the column indexes. This step is where the main difference is: we don't recreate the JSON; we simply begin to write our data columns by accessing them via column indexes (Apply to each actions). This cuts the run time by another 30% to 40%.

** If you need to add or remove columns, you can. Follow the formatting and pattern in the "append json string items" step and anywhere else columns are accessed by column index, with 0 being the first column, for example "variables('variable')" or "split(".

Import the package attached below into your own environment. You only need to change the action where I get the file content from, such as SharePoint Get file content or OneDrive Get file content.
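For the compact version, the idea of reading cells by position instead of through a JSON step can be sketched in Python like this. `COLUMN_MAP` and the destination names `Title`, `Cost`, and `Vendor` are hypothetical; the sketch assumes commas inside quoted fields were already masked earlier, and it skips the header row.

```python
# Hypothetical mapping of destination columns to CSV column indexes (0 = first column).
COLUMN_MAP = {"Title": 0, "Cost": 1, "Vendor": 2}


def rows_by_index(csv_text: str, column_map: dict[str, int] = COLUMN_MAP) -> list[dict[str, str]]:
    """Pick cells by position from each data row, with no intermediate JSON step."""
    records = []
    for line in csv_text.splitlines()[1:]:  # skip the header row
        if not line.strip():
            continue  # ignore blank lines
        cells = line.split(",")  # assumes commas inside quoted fields were already masked
        records.append({dest: cells[idx] for dest, idx in column_map.items()})
    return records


if __name__ == "__main__":
    sample = "Item,Cost,Vendor\nWidget,1*200.50,Acme* Inc\nGadget,15.00,Globex\n"
    for record in rows_by_index(sample):
        print(record["Title"], record["Cost"])
```

Adding or removing a column means adding or removing one entry in the map, which mirrors the advice to follow the existing index pattern.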
